I am an Applied Researcher at eBay working on deep neural networks and their applications to buying and selling items on eBay. I build ML models that extract useful information from text, as well as the infrastructure frameworks that power our machine learning workflows, such as automatic training, tuning, and evaluation of ML models at scale.

Before this, I was a Computer Science MS student (specialization in Machine Learning) at Georgia Tech, advised by Prof. Dhruv Batra, and worked closely with Prof. Devi Parikh. I worked at the intersection of AI research and software engineering, where I focused on simplifying and standardizing the evaluation of ML models by developing and managing a widely adopted evaluation platform, EvalAI (MS Thesis). EvalAI supports reproducibility of results, consistent evaluation, evaluation of a model’s code instead of its predictions, and measurement of progress on pushing the frontiers of AI. I am the lead developer and maintainer of this project.

During my master's, I was awarded The Marshall D. Williamson Fellowship Award 2021 for academic excellence and leadership.

I also lead an open source organization, CloudCV (a 7th-year GSoC organization), where we are building several open-source software projects for reproducible AI research, such as evalai-cli and visual-chatbot.

Prior to joining grad school, I spent a year as a visiting research scholar in the Machine Learning and Perception Lab at Georgia Tech.

Please feel free to reach out to me at rishabhjain2018@gmail.com or rishabhjain@gatech.edu.

News

- [June 2021] Started working as a Research Engineer at eBay.

- [May 2021] Graduated from Georgia Tech with a Master's degree in Computer Science. My thesis is available here.

- [Mar 2021] Awarded The Marshall D. Williamson Fellowship Award 2021 for academic excellence and leadership.

- [Feb 2021] CloudCV selected as a mentoring organization in Google Summer of Code for the 7th time in a row.

- [Sep 2020] Our paper Dialog without Dialog was accepted to NeurIPS 2020.

- [Jun 2020] Invited speaker at EmbodiedAI workshop. [Talk]

- [May 2020] Interned at eBay with Roman Maslovskis and Uwe Mayer for summer 2020.

- [Feb 2020] Organization Administrator for Google Summer of Code 2020 with CloudCV.

- [Feb 2020] CloudCV selected as a mentoring organization in Google Summer of Code for the 6th time in a row.

- [Oct 2019] Represented CloudCV at Google Summer of Code Mentor Summit 2019, Munich, Germany.

- [Oct 2019] EvalAI accepted to the AI Systems workshop at the SOSP conference.

- [Aug 2019] Joined Georgia Tech for a Master's in Computer Science.

- [Jun 2019] Presented EvalAI at the Habitat Workshop at CVPR.

- [Mar 2019] Our paper nocaps: novel object captioning at scale was accepted to ICCV 2019.

- [Feb 2019] Served as a Google Summer of Code organization administrator with CloudCV.

- [Jan 2019] Team Lead, CloudCV.

- [Nov 2018] Served as a Google Code-In organization administrator with CloudCV.

- [Oct 2018] Represented CloudCV at Google Summer of Code Mentor Summit 2018, Google Sunnyvale.

- [Jul 2018] Joined as a Visiting Research Scholar at Georgia Tech to work with Prof. Dhruv Batra & Prof. Devi Parikh.

- [Apr 2018] Served as a Google Summer of Code mentor with CloudCV.

- [Nov 2017] Served as a Google Code-In 2017 mentor with CloudCV.

- [May 2017] Selected as a Google Summer of Code student with CloudCV.

Projects

EvalAI: Towards Better Evaluation Systems for AI Agents (MS Thesis)

Built an open source platform for evaluating and benchmarking AI models. We have hosted 200+ AI challenges with 18,000+ users, who have created 180,000+ submissions. More than 30 organizations from industry and academia use it to host their AI challenges. The project is open source, with 130+ contributors and 2M+ yearly pageviews. Organizations using it include Google Research, Facebook AI Research, DeepMind, Amazon, eBay Research, and Mapillary Research, along with research labs from MIT, Stanford, Carnegie Mellon University, Georgia Tech, Virginia Tech, UMBC, University of Pittsburgh, Draper, University of Adelaide, IIT-Madras, and Nankai University, which use it to host large AI challenges such as AlexaPrize. Its forked versions are used by large organizations such as the World Health Organization and Forschungszentrum Jülich (one of the largest interdisciplinary research centres in Europe) to host their challenges instead of reinventing the wheel.

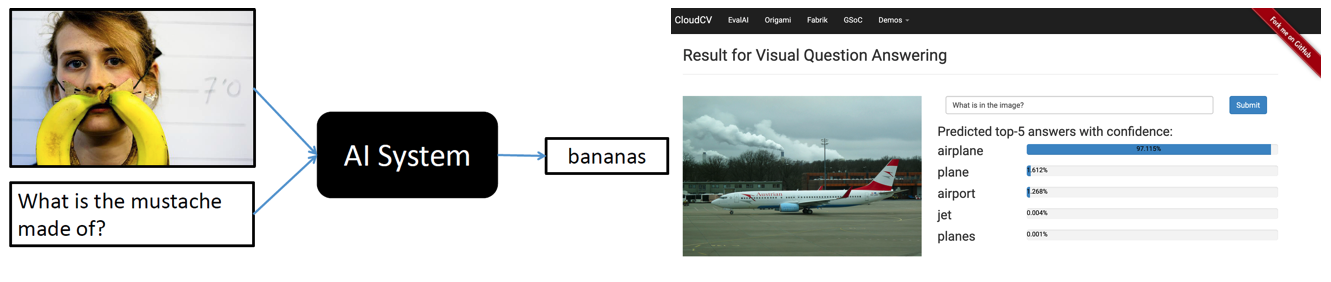

In Visual Question Answering, given an image and a free-form natural language question about the image (e.g., "What kind of store is this?", "How many people are waiting in the queue?", "Is it safe to cross the street?"), the model's task is to automatically produce a concise, accurate, free-form, natural language answer ("bakery", "5", "Yes"). This demo is implemented using the Pythia model. It is used by 120K+ users.

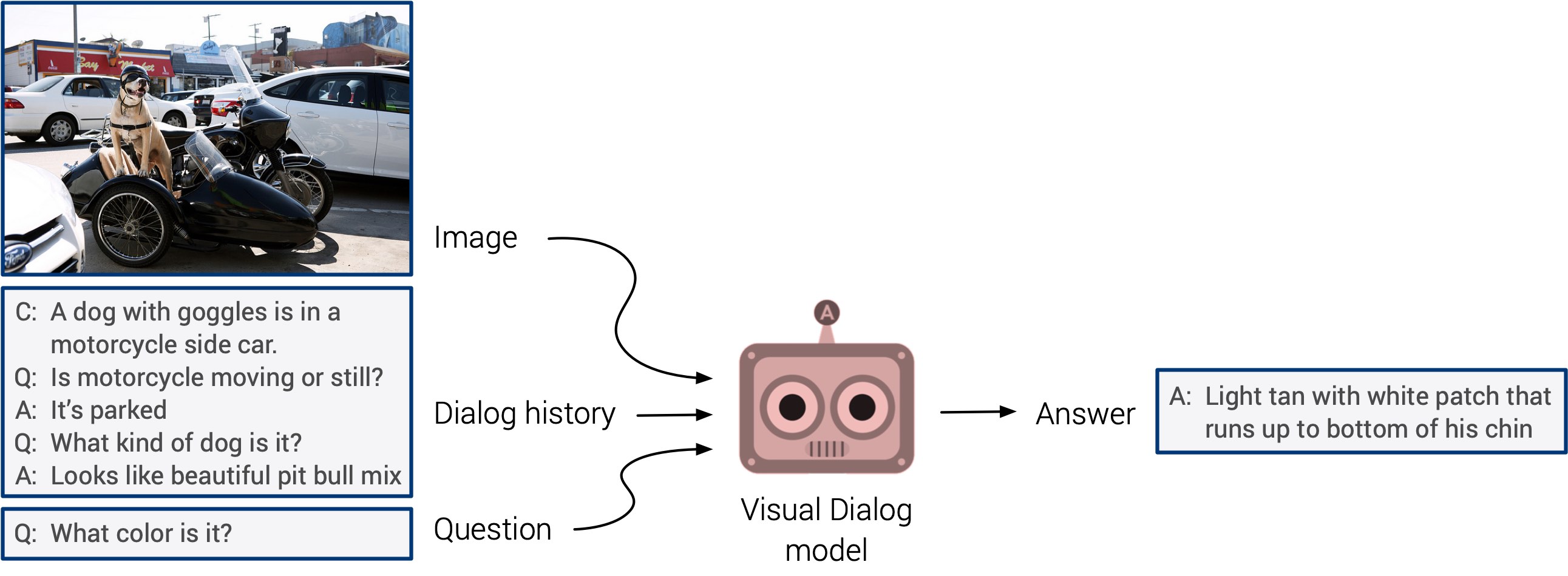

Visual Chatbot: A chatbot that can see!

Built a visual chatbot that can hold a meaningful dialog with humans in natural, conversational language about visual content. Specifically, given an image, a dialog history, and a question about the image, the chatbot grounds the question in the image, infers context from the history, and answers the question accurately. This demo is implemented using the Late Fusion model from the CVPR 2017 paper. It is used by 200K+ users.

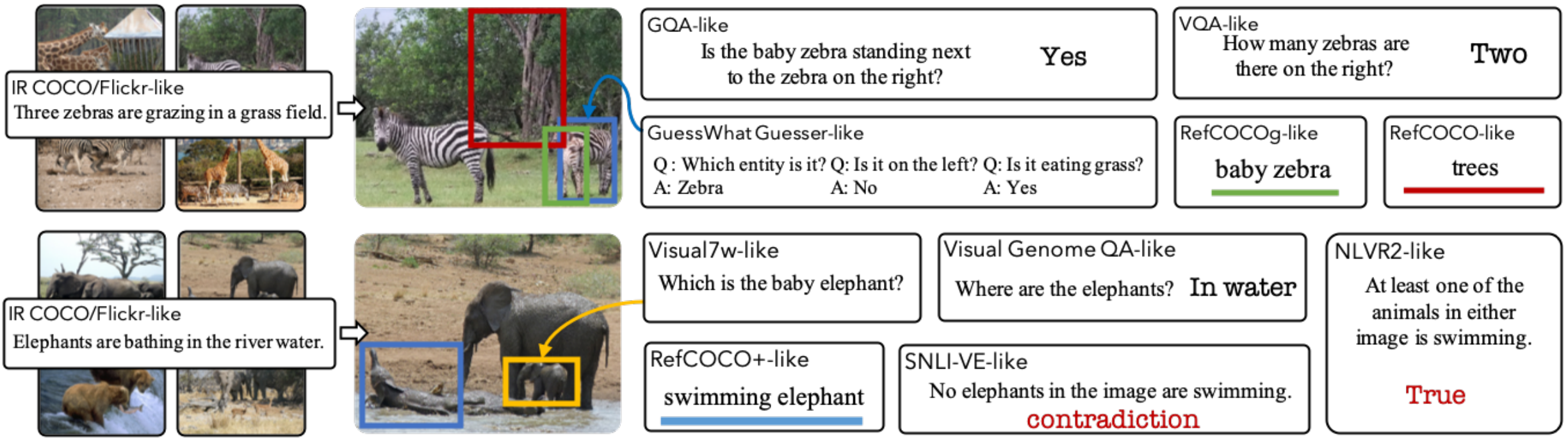

Built a demo of a single model trained on 12 datasets from four broad categories of tasks: visual question answering, caption-based image retrieval, grounding referring expressions, and multi-modal verification. Compared to independently trained single-task models, this represents a reduction from approximately 3 billion parameters to 270 million while simultaneously improving performance by 2.05 points on average across tasks. It is used by 30K+ users.

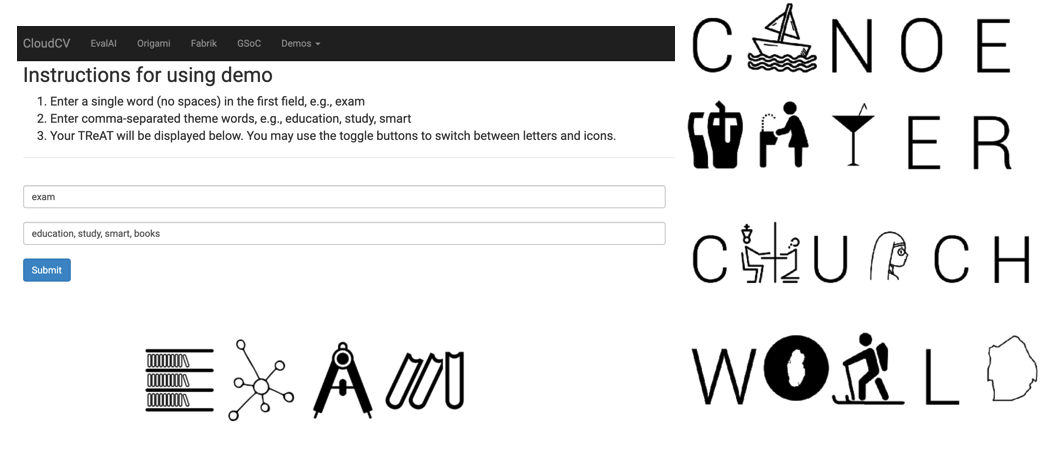

Trick or TReAT: Thematic Reinforcement for Artistic Typography Demo

Given an input word (e.g., exam) and a theme (e.g., education), the individual letters of the input word are replaced by clipart relevant to the theme that visually resembles the letters - adding creative context to the potentially boring input word.

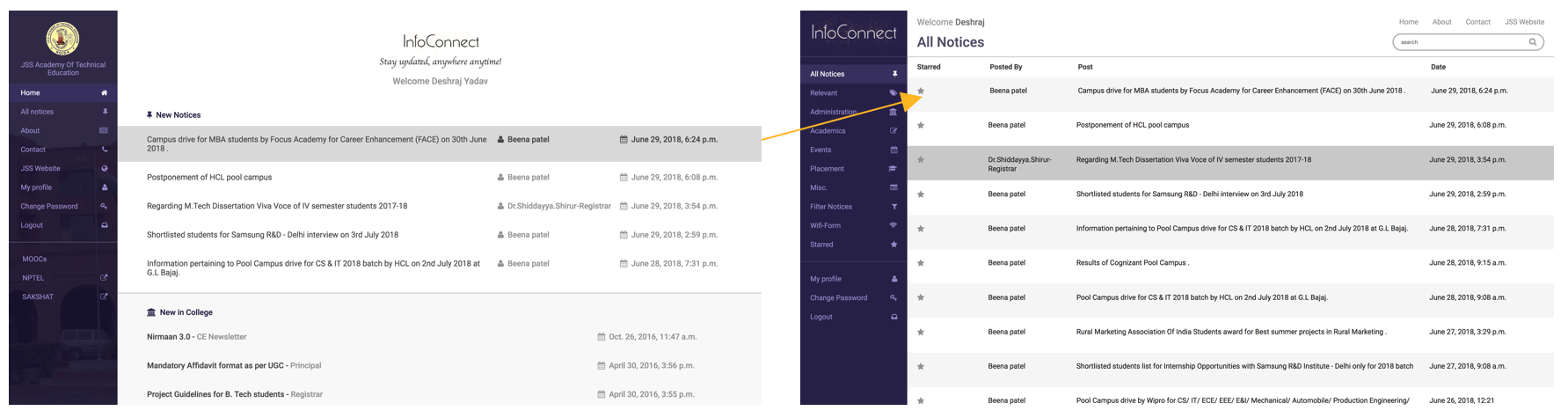

JSS InfoConnect: Information Center for College

An officially recognized web-based application that acts as a medium for interaction between all students, faculty, and management of JSSATE, Noida. It serves 10K+ requests per day and has 20K+ registered users; 20K+ notices and documents have been uploaded since its launch in 2016.

Publications

Dialog without Dialog: Learning Image-Discriminative Dialog Policies from Single-Shot Question Answering Data

EvalAI: Towards Better Evaluation Systems for AI Agents

nocaps: novel object captioning at scale

(* denotes equal contribution)

Experience

Research Engineer, Structured Data and Applied Research Team

Supervised by: Dr. Roman Maslovskis and Steven Xin (June 2021 - Present)

I am currently working on deep neural networks and their applications to selling items on eBay. I build ML models that extract useful information from text, as well as the infrastructure frameworks that power our machine learning workflows, such as automatic training, tuning, and evaluation of ML models at scale.

Team Lead (Jan 2019 - Present)

Leading a team of 15+ contributors to actively maintain the CloudCV project, which aims to make AI research more reproducible.

Graduate Research Assistant, Machine Learning and Perception Lab

Supervised by: Prof. Dhruv Batra and Prof. Devi Parikh (Aug 2019 - May 2021)

EvalAI (MS Thesis): Built an open source platform for evaluating and benchmarking AI models. We have hosted 200+ AI challenges with 18,000+ users, who have created 180,000+ submissions. More than 30 organizations from industry and academia use it to host their AI challenges. The project is open source, with 130+ contributors and 2M+ yearly pageviews. Organizations using it include Google Research, Facebook AI Research, DeepMind, Amazon, eBay Research, and Mapillary Research, along with research labs from MIT, Stanford, Carnegie Mellon University, Georgia Tech, Virginia Tech, UMBC, University of Pittsburgh, Draper, University of Adelaide, IIT-Madras, and Nankai University, which use it to host large AI challenges such as AlexaPrize. Its forked versions are used by large organizations such as the World Health Organization and Forschungszentrum Jülich (one of the largest interdisciplinary research centres in Europe) to host their challenges instead of reinventing the wheel.

GuessWhich: Evaluating the role of interpretable explanations in making a model predictable to a human. We studied whether textual or visual explanations from an AI model, in the context of an interactive, goal-driven, collaborative human-AI task, help humans predict its behavior.

Software Engineering Intern, Structured Data and Applied Research Team

Supervised by: Dr. Roman Maslovskis and Dr. Uwe Mayer (May 2020 - Aug 2020)

Evaluating and predicting attribute values in listings: Given an image and a text description of a listing on eBay, the task is to predict the missing attributes in the listing, for instance the missing color or brand attribute. I built an end-to-end system for processing visual and textual data and for training AI models. I also trained an early fusion model on image and text data that outperformed the uni-modal image and text models by 5% and 22% on the test dataset for the color attribute and by 20% and 4% on the test dataset for the brand attribute.

Visiting Research Scholar, Machine Learning and Perception Lab

Supervised by: Prof. Dhruv Batra and Prof. Devi Parikh (Aug 2018 - June 2019)

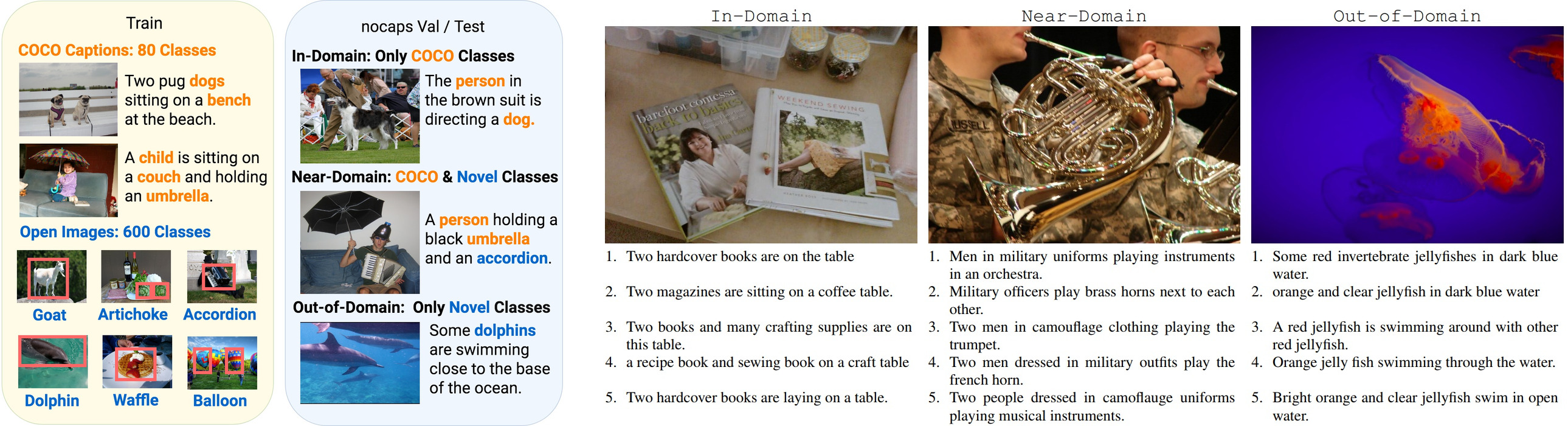

To encourage the development of image captioning models that can learn visual concepts from alternative data sources, such as object detection datasets, we present the first large-scale benchmark for this task. Dubbed nocaps, for novel object captioning at scale, our benchmark consists of 166,100 human-generated captions describing 15,100 images from the Open Images validation and test sets. These images contain more than 500 objects, of which more than 400 are never described in the COCO Captions dataset.

Google Summer Of Code (GSoC)

Organization Administrator (2020, 2019), Organization Mentor (2018), Student Developer (2017) (May 2017 - Aug 2020)

2017: Selected as a GSoC student. I developed new features for hosting AI challenges in a streamlined manner, and implemented REST APIs, the frontend, and several analytics features for both participants and hosts in EvalAI.

2018: Mentored a student to design a command line tool (EvalAI-CLI) for EvalAI, which lets participants install and use EvalAI as a Python package.

Google Code In (GCI)

Organization Administrator (2019, 2018), Organization Mentor (2017) (Nov 2017 - Jan 2020)

2017: Applied with CloudCV as a mentoring organization and got it accepted to mentor for the first time in Google Code-In. I mentored high school students on open-source projects in frontend, backend and DevOps.

2018: Led a team of 10+ mentors to mentor high school students on the open-source projects EvalAI, Fabrik, and Origami.

2019: Led a team of 10+ mentors to mentor high school students on the open-source projects EvalAI, EvalAI-CLI, and EvalAI-ngx.

Python Software Society of India (PSSI)

iAugmentor Labs

Software Developer Intern, Machine Learning (Jun 2016 - Aug 2016)

Developed an SVM-based classifier that takes as input a video of a person answering a job interview question and outputs a confidence score for the smiling behaviour of the person in the video.

Nibble Computer Society (NCS)

Organizing Member (Feb 2015 - May 2018)

Organized multiple code labs, seminars, and workshops on OOP, Advanced C, C++, Google Summer of Code, Git, etc., and mentored 30+ undergraduate students on software development. I was also responsible for organizing the college’s annual techno-cultural fest, Zealicon.

Invited Talks

- [Jun 2020] Invited speaker at EmbodiedAI workshop. [Talk]

- [Oct 2019] Represented CloudCV at the Google Summer of Code Mentor Summit in Munich, Germany. (Slides)

- [Jun 2019] Presented EvalAI at the Habitat workshop at CVPR. (Slides)

- [Oct 2018] Represented CloudCV at the Google Summer of Code Mentor Summit at Google Sunnyvale. (Slides)

Education

Masters in Computer Science

Georgia Institute of Technology, Atlanta, USA (Aug 2019 - May 2021)

- Specialization in Machine Learning

- GPA: 4.0/4.0

Bachelor of Technology in Computer Science and Engineering

JSS Academy of Technical Education, Noida, India (Aug 2014 - May 2018)

- Passed with an aggregate of 80.4% (with Hons.)

- Class Rank: 8th out of 150 students (top 5%)